AI Tools & Intellectual Property (IP) – Who Owns What?

Jerry Wallis


In the age of AI, and generative AI in particular, tools like ChatGPT can generate content in seconds, and organisations find themselves grappling with a pivotal question: Who owns AI-generated content?

The use of AI, particularly ChatGPT, in content creation has sparked significant discussion about content custody and attribution. Some hail ChatGPT as a groundbreaking development, while others raise concerns about the accuracy, truthfulness, bias, and accountability of AI-generated material.

Legal uncertainties also loom, especially around intellectual property and the prospect of AI platforms taking over work, particularly for people involved in content creation.

AI services have become ubiquitous across organisations, used for everything from internal presentations to marketing materials, automation, and content creation. However, as organisations rely more heavily on AI-generated content, complications arise around ownership, uniqueness, and proof of intellectual property.

Redefining The Creative Landscape – How Do Generative AI Tools Work? 🤖

AI image generators like Stable Diffusion, Midjourney, or DALL·E 2 can produce captivating visuals in a wide range of styles, from vintage photographs and watercolours to pencil drawings and Pointillism. The results are striking, both in their quality and in how much faster they arrive than a human artist could produce them.

Text generators, most notably ChatGPT, are equally remarkable, crafting essays, poems, marketing copy, and summaries while adeptly mimicking various styles and forms, even if they occasionally take creative liberties with facts.

While it might seem that these AI tools conjure output from thin air, the reality is different. Generative AI models are trained on vast archives of images and text. During training, a model adjusts billions of internal parameters to capture the patterns and relationships in that data, and it then uses those learned patterns to predict a suitable output in response to a prompt.

However, this brings us to the question at hand. Is this content creation process completely free from legal concerns? Are there any risks of intellectual property infringement?

Complex legal questions remain unresolved, such as how copyright, patent, and trademark infringement rules apply to AI creations. Determining who owns the content these platforms generate is also challenging. Before businesses can fully embrace the advantages of generative AI, they need a thorough understanding of these risks and of strategies for protecting themselves. In this article, we'll examine where copyright rules for AI-generated content stand today and where they may be heading.

Attempting To Define Ownership Of AI-Content 🔖

The landscape of copyright law is undergoing seismic shifts as artificial intelligence (AI) continues to redefine the boundaries of creative expression.

Recent innovations in AI have triggered complex questions about applying traditional copyright principles, such as authorship, infringement, and fair use, to the realm of AI-generated content.

Generative AI tools such as ChatGPT learn from external data, which can include copyrighted material. Because that training data spans vast amounts of publicly available content, it is difficult to pinpoint the rightful owner of AI-generated content.

Generated content ranges from graphics for presentations to copy for proposals or marketing materials. When this content is intended for publication or monetisation, proving ownership becomes essential, yet the unique challenges posed by AI-generated content make this a complex endeavour.

Why Is Attributing Ownership Of AI-Content More Challenging Than It Seems? 🫤

Lack of proper guidelines & regulations 👨‍⚖️

One key factor behind this complexity is the lack of specific laws and policies governing AI-generated content. As AI advances, concerns about image creation, voice cloning, and deepfakes have escalated. These touch on sensitive issues such as impersonation and misinformation, and they highlight the need for regulations addressing privacy, liability, copyright, and intellectual property in relation to generative AI tools.

AI organisations and U.S. government bodies are currently discussing how to define these rules. Until concrete regulations exist, however, organisations relying on AI-generated content face an additional layer of uncertainty.

AI generates content differently than humans do 🤖

The question of ownership becomes even more intricate when you consider how generative AI tools create content. ChatGPT, for instance, was trained on large amounts of publicly available content and generates responses from the patterns it learned.

While the tool itself is not obligated to cite its sources, users who work with AI-generated content often assume they own it. This assumption stems from their active role in editing the content so it reads naturally, fact-checking it, and ultimately publishing it.

Humans, by contrast, learn through a combination of formal education, personal experience, exposure to many forms of content, and interaction with the world around them.

AI models and generative tools, on the other hand, rely heavily on training data: what they generate is a product of patterns learned from vast datasets. The ownership question arises because the AI doesn't create content from a personal standpoint; it mimics patterns present in the data it was trained on. Add the uncertainty about what that source data actually contains, and the ownership concerns compound further.

The Authorship Conundrum ✍️

One of the core issues at the heart of the AI ownership and copyright debate is whether AI-generated content qualifies for copyright protection, a concept intricately tied to the notion of “authorship.”

U.S. copyright law is rooted in the idea that works are created by humans, so it faces a conundrum now that advanced AI systems can produce written works, images, music, and more with little or no human involvement.

The question persists: Can an AI system, operating autonomously without human intervention, be considered the author and owner of a work under copyright law?

Understanding The Legal Landscape of Generative AI 🚨

It's important to remember that generative AI is a recent entrant to the market, so it is governed by existing legal frameworks that were not written with it in mind. Courts are actively grappling with how to apply current laws to issues ranging from infringement and rights of use to the ownership of AI-generated works.

Is Training Data The Real Problem? 🏋️‍♂️

Questions linger about unlicensed content in the source and training data, and about whether users should be allowed to prompt these tools with copyrighted and trademarked works without the creators' explicit permission.

Ongoing litigation such as Andersen v. Stability AI et al., filed in January 2023, underscores the legal challenges facing generative AI. In this case, a group of artists is suing the companies behind several generative AI platforms, alleging that their original works were used without authorisation to train AI models and that the resulting outputs are derivative works that may not be sufficiently transformative. The courts must now define the boundaries of a “derivative work” under intellectual property law, and different federal circuits may interpret it differently.

Other cases filed in 2023 claim that AI tools were trained on data lakes containing thousands or millions of unlicensed works. Getty Images, a prominent image licensing service, sued Stability AI, the company behind Stable Diffusion, alleging improper use of its copyrighted photos.

The outcomes of these cases are likely to hinge on how courts interpret the fair use doctrine, which allows copyrighted work to be used without permission for purposes such as criticism, comment, news reporting, teaching, scholarship, or research. The situation recalls past clashes between technology and copyright law, such as Google's successful argument in Authors Guild v. Google that scanning books and showing text snippets in its search engine was a transformative use.

Amid this uncertainty, businesses embracing generative AI face risks of infringement and inadvertent contract breaches. They should watch for unlicensed works in training data and for outputs that may be unauthorised derivative works, because knowingly using infringing material risks a finding of willful infringement, which carries heavier consequences.

What Does The Law Say Regarding IP Of AI-Generated Content? ⚖️

Further legal complexities arise when AI-generated content, such as marketing materials or articles, is distributed more widely. As we've established, the training data that AI uses is a potential source of legal exposure. That said, legal precedent on reusing content derived from, or built on, the intellectual property of others has yet to be established.

Analysts like Bern Elliot from Gartner, a technological research and consulting firm based in Stamford, Connecticut, emphasise the need to understand the legal ramifications surrounding tools like ChatGPT.

Legal opinions on the matter highlight several key points. First, ChatGPT tends not to include citations or attributions to sources, which raises questions from an intellectual property perspective.

However, according to Michael Kelber, co-chair of Neal Gerber Eisenberg's IP practice, there may be no citation requirement if the source material is not quoted directly, though attribution would still help readers assess bias and credibility. He adds that a lack of proper citations undermines the credibility of research, but citations may not be necessary in other contexts.

Who Owns AI-Generated Content? Let’s Hear What The Experts Have To Say 🤓

Copyright raises its own complex questions alongside these ownership concerns. Current U.S. law requires creative authorship by a human for a work to enjoy copyright protection.

The challenge arises in determining whether the requester using the AI tool or the AI developer, like OpenAI, can claim ownership. Margaret Esquenet, partner with Finnegan, Henderson, Farabow, Garrett & Dunner, LLP, notes that the human authorship standard poses challenges in the context of AI-created works, potentially leading to the dedication of such works to the public domain.

In the event of an intellectual property challenge, the human authorship prerequisite becomes crucial. Esquenet also suggests that an AI-created work may become either a public domain work or a derivative work, depending on factors including the origin of the training dataset and how similar the AI work is to materials in the training set.

On the possibility of an AI tool producing identical content for different users, Esquenet explains that independent creation is a complete defence against copyright infringement claims: if two parties independently generate the same work, an infringement claim is unlikely to succeed.

The question of who owns AI-generated content has been a hot topic of debate in our company as well (that's partly why this blog exists). Our CEO, Julian Wallis, recently shared his thoughts on the subject in a video on his LinkedIn profile. You don't need to go anywhere, though, because we've included the entire post right here.

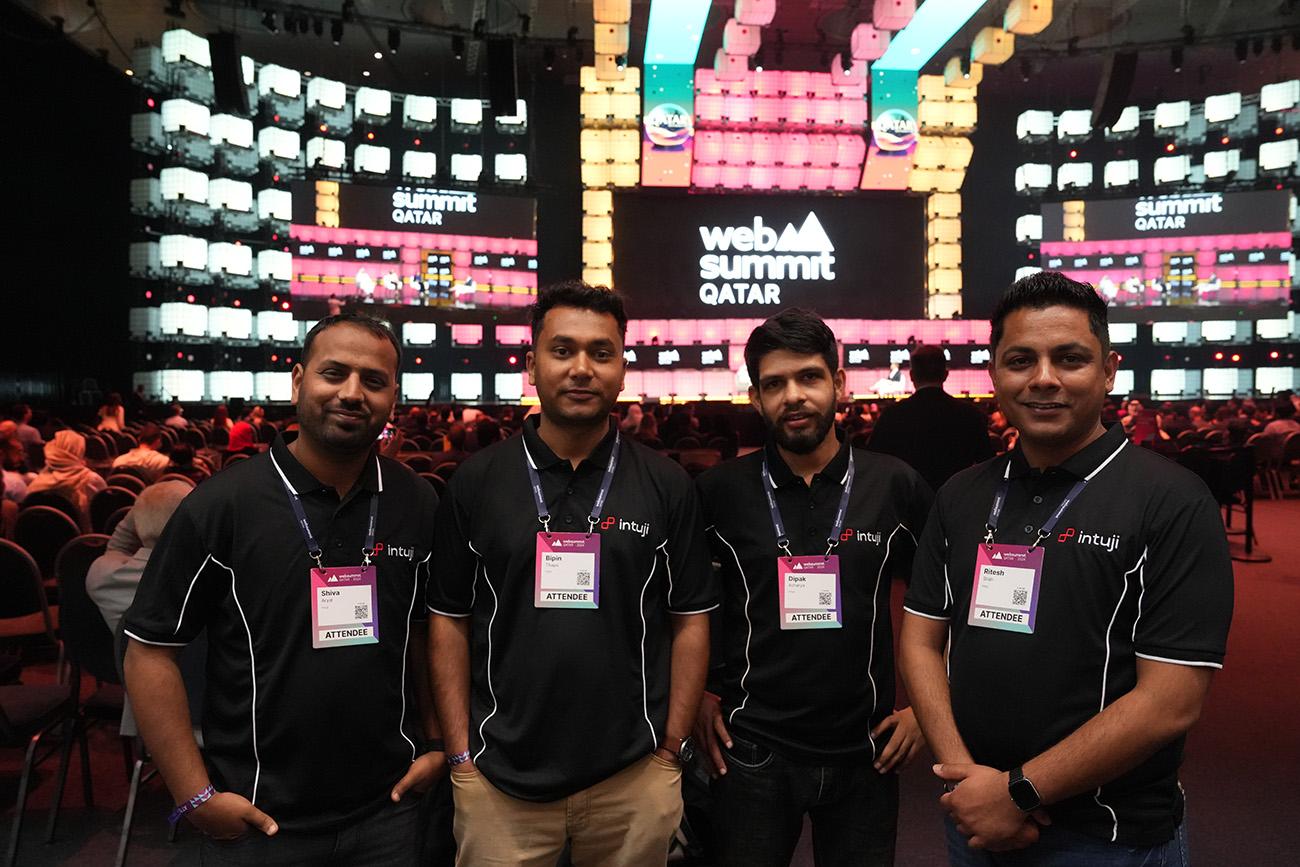

We also spoke with our CIO, Ritesh Shah, about this exact topic, and he echoed the views of Kelber and Esquenet. Below is his perspective on the matter.

“We are venturing into uncharted territory, as there is no established legislation to steer our understanding, especially in relation to copyright and ownership. Think of the user's prompt as their idea; it makes sense that what the AI creates belongs to them. It's not exactly the same, but it's similar in some ways to how designers use tools like Photoshop to make things: what they make is theirs.

Even though AI doesn't have creativity or an entitlement to ownership, it still makes sense to say the user owns what it creates. The tricky part, though, is that without clear rules, it is always difficult to figure out who really owns what the AI makes.”

Citations Are Key With AI-Generated Content 🔏

The question of liability for harmful content created by AI also arises. Michael Kelber questions whether the blame should fall on the company behind the AI, such as OpenAI in the case of ChatGPT, or on the user who posed the query. When it comes to citing AI-generated content, particularly in articles or papers, the consensus is that proper citation is essential.

Kelber suggests citing ChatGPT as the source, acknowledging its contribution to the generated response. This ensures transparency and accountability in the use of AI-generated content.

So, What Does This Mean For Professionals & Businesses? 🤝

It's clear that amid this uncertainty, everyone involved must be proactive in mitigating the potential legal risks of using generative AI technologies. Who are these parties, you ask? AI developers, end users, and business owners.

Companies and business owners must protect themselves in the short and long term by ensuring AI is used within their organisations in line with legal best practices. First things first, though: AI developers should comply with the law when acquiring data for model training and compensate IP owners appropriately, setting things off on the right path from the get-go. Customers of AI tools, for their part, should scrutinise terms of service and privacy policies and confirm that training data is properly licensed.

Developers need to adopt transparent practices, recording the provenance of AI-generated content: the platform used, its settings, metadata about the seed data, and tags for easy verification. This makes it possible to reproduce the content and to demonstrate the user's intent, which can help defend against intellectual property infringement claims.
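To make the idea of a provenance record more concrete, here is a minimal Python sketch of what one log entry might look like. It is an illustration under our own assumptions, not a legal standard or any AI platform's format: the field names (`platform`, `settings`, `prompt`, `output_sha256`, `tags`), the choice of a SHA-256 hash as a fingerprint of the output, and the example values are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class ProvenanceRecord:
    """Hypothetical provenance entry for one piece of AI-generated content."""
    platform: str        # the AI service used (illustrative name)
    settings: dict       # generation parameters, e.g. model name, seed, steps
    prompt: str          # the user's prompt, kept as evidence of human input and intent
    output_sha256: str   # SHA-256 fingerprint of the generated content
    created_at: str      # UTC timestamp in ISO 8601 format
    tags: list = field(default_factory=list)  # free-form labels for later lookup


def make_record(platform, settings, prompt, output, tags=None):
    """Build a record, hashing the output so it can be checked later."""
    digest = hashlib.sha256(output.encode("utf-8")).hexdigest()
    return ProvenanceRecord(
        platform=platform,
        settings=dict(settings),
        prompt=prompt,
        output_sha256=digest,
        created_at=datetime.now(timezone.utc).isoformat(),
        tags=list(tags or []),
    )


def verify(record, candidate):
    """Return True if `candidate` is exactly the content that was logged."""
    return hashlib.sha256(candidate.encode("utf-8")).hexdigest() == record.output_sha256


def to_json(record):
    """Serialise the record, e.g. for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


# Usage with made-up values:
rec = make_record(
    "example-image-service",
    {"model": "example-model", "seed": 1234, "steps": 30},
    "A watercolour lighthouse at dusk",
    "<generated content>",
    tags=["marketing", "q1-campaign"],
)
print(verify(rec, "<generated content>"))  # True
print(verify(rec, "<edited content>"))     # False
```

Keeping the prompt next to the settings is the part most relevant to demonstrating intent; the hash only shows that a given file matches what was logged, and says nothing on its own about who owns it.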

Content creators should actively monitor digital channels for derivative works and examine the risk to their intellectual property portfolios. Brands must evolve trademark and trade dress monitoring to include the examination of stylistic elements in AI-generated works.

Businesses should build protections into their contracts, requiring AI platforms to confirm that their training data is properly licensed and to indemnify them against potential IP infringement. Contracts should also add AI-related language to confidentiality provisions, barring the use of confidential information in AI tools' text prompts.

Looking ahead, content creators can build their own datasets or use open-source generative AI that sources content lawfully. They may also explore co-creation with their followers, updating terms of service and privacy policies to reflect legal changes.

Conclusion – AI & IP 😎

As the legal landscape for AI-generated content evolves, these insights offer a glimpse of the complexities surrounding intellectual property, ownership, and accountability.

As organisations and individuals increasingly rely on AI tools like ChatGPT, navigating these legal nuances becomes crucial for ethical and responsible content creation.

Ultimately, generative AI holds transformative potential, but respecting the rights of content creators and implementing proactive measures is essential to navigating its legal complexities and unlocking its true value. If you found value in this article, we invite you to explore further insights at our learning centre, our curated collection of in-depth content covering various aspects of modern technology.


Published On January 22, 2024